Say the words “artificial intelligence,” and most people think of Alexa and Siri. Others might think of movies like The Terminator or 2001: A Space Odyssey.

The truth is that artificial intelligence (AI) isn’t a far-off science fiction concept. In fact, it’s all around us: think of Netflix recommending your next TV show or Uber finding the best route home.

AI is a sophisticated ecosystem of modern, interconnected technologies that’s evolved over decades—and continues to evolve today. So it should be no surprise that the history of AI is also complex and multilayered. It’s a history that features a variety of changing tools and capabilities that have built up AI as we know it to its current state.

To understand the importance of AI today—and to prepare for tomorrow—it helps to understand where AI started and how it’s evolved to become the game-changing technology it is today.

20th-century AI: An ambitious proof of concept

Modern AI was born in the academic chambers of elite university research departments, where scholars thought deeply about the future of computing. But its early years left it confined to these chambers, stranded thanks to a lack of data and computing power.

In 1956, Dartmouth College hosted the Dartmouth Summer Research Project on Artificial Intelligence—a workshop that came to be known as a critical first step into the academic research of AI. During the workshop, 20 researchers aimed to prove the hypothesis that learning could be described so precisely “that a machine can be made to simulate it.”

A year later, in 1957, American psychologist Frank Rosenblatt expanded on the Dartmouth research with the perceptron, an algorithm that could successfully perform binary classification. This offered early, promising evidence that artificial neurons could learn from data.
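To make the idea of a perceptron learning from data a little more concrete, here is a minimal sketch in Python. It is not Rosenblatt’s original implementation; the function names, the learning rate, and the toy AND-gate dataset are all assumptions chosen for illustration. It only shows the classic update rule: nudge the weights whenever a prediction is wrong.

```python
from typing import List, Sequence, Tuple


def train_perceptron(
    samples: Sequence[Sequence[float]],
    labels: Sequence[int],
    epochs: int = 20,
    learning_rate: float = 1.0,
) -> Tuple[List[float], float]:
    """Learn weights and a bias for binary (0/1) classification."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # -1, 0, or +1
            # Only wrong predictions (error != 0) change the weights.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Classify one input as 0 or 1 using the learned weights."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


if __name__ == "__main__":
    # Toy data (an assumption for this sketch): the logical AND of two inputs.
    and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
    and_labels = [0, 0, 0, 1]
    w, b = train_perceptron(and_inputs, and_labels)
    for point in and_inputs:
        print(point, "->", predict(w, b, point))
```

A single perceptron like this can only separate classes with a straight line (or plane), which is one reason later research moved on to multilayer networks.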

And another year later, John McCarthy, an attendee at the Dartmouth Summer Research Project on Artificial Intelligence, worked with students at MIT to develop Lisp, a new programming language. In the years and decades that followed, McCarthy’s work would help bring new and even more exciting projects to life, including the SHRDLU natural language program, the Macsyma algebra system, and the ACL2 logic system.

As we look back at these early experiments, we can see AI taking its first, shaky steps from the world of high-minded research into the practical world of computing.

The year 1960 saw the debut of Simulmatics, a company that claimed it could predict how people would vote based on their demographics.

In 1965, researchers developed so-called “expert systems.” These systems allowed AI to solve specialized problems within computer systems by combining a collection of facts and an inference engine to interpret and evaluate data.
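The combination of a fact base and an inference engine can be sketched in a few lines of Python. This is a toy illustration, not a reconstruction of any historical expert system: the rules, the fact names, and the `forward_chain` function are all hypothetical. It uses forward chaining, one common inference strategy, where rules keep firing until no new facts can be derived.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is known, add the conclusion.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical car-troubleshooting rules, invented for this example.
RULES: List[Rule] = [
    (frozenset({"engine_wont_start", "lights_dim"}), "battery_flat"),
    (frozenset({"battery_flat"}), "recommend_jump_start"),
    (frozenset({"engine_wont_start", "fuel_gauge_empty"}), "recommend_refuel"),
]


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no rule produces a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"engine_wont_start", "lights_dim"}
    derived = forward_chain(observed, RULES) - observed
    print(sorted(derived))
```

The knowledge lives in the rules rather than in the code, which is what made these systems attractive for specialized domains: experts could add or change rules without rewriting the engine.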

Then, a year later, in 1966, MIT professor Joseph Weizenbaum designed a pattern-matching program called Eliza that gave users the impression they were talking to an intelligent machine. Users could type statements into the program, and Eliza, acting as a psychotherapist, would respond with an open-ended question.
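A heavily simplified sketch of this kind of pattern matching is shown below. It is not Weizenbaum’s script: the patterns, the reply templates, and the `respond` function are assumptions made up for illustration, and the original program did more (for example, swapping pronouns in the reflected text).

```python
import re
from typing import List, Pattern, Tuple

# Each rule pairs a pattern with a reply template; {0} is the captured text.
# These rules are hypothetical examples, not the original Eliza script.
RULES: List[Tuple[Pattern[str], str]] = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

DEFAULT_REPLY = "Please, go on."


def respond(message: str) -> str:
    """Return an open-ended question built from the first matching rule."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I feel tired.", "My job is stressful", "Hello"]:
        print(f"> {line}\n{respond(line)}")
```

Nothing here understands the user. The program only reflects fragments of the input back, which is why the effect is convincing for a few exchanges and then breaks down.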

By the mid-1970s, governments and corporations were losing faith in AI. Funding dried up, and the period that followed became known as the “AI winter.” While there were small resurgences in the 1980s and 1990s, AI was mostly relegated to the realm of science fiction and the term was avoided by serious computer scientists.

In the late 1990s to early 2000s, we saw at-scale application of machine learning techniques like Bayesian methods for spam filtering by Microsoft and collaborative filtering for Amazon recommendations.
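As a rough illustration of the Bayesian approach to spam filtering, here is a small naive Bayes classifier in Python. It is a textbook-style sketch, not the filter any particular company shipped: the training messages, the function names, and the use of add-one (Laplace) smoothing are assumptions for this example, and it expects at least one training message per label.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

LABELS = ("spam", "ham")


def train(messages: List[Tuple[str, str]]) -> Tuple[Dict[str, Counter], Counter]:
    """Count words per label and messages per label."""
    word_counts: Dict[str, Counter] = {label: Counter() for label in LABELS}
    doc_counts: Counter = Counter()
    for text, label in messages:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, doc_counts


def classify(text: str, word_counts: Dict[str, Counter], doc_counts: Counter) -> str:
    """Pick the label with the highest log-probability under naive Bayes."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in LABELS:
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)  # prior
        for word in text.lower().split():
            # Add-one smoothing so unseen words do not zero out the score.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        scores[label] = score
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Tiny made-up training set, for illustration only.
    training = [
        ("win free money now", "spam"),
        ("free prize claim now", "spam"),
        ("meeting agenda for monday", "ham"),
        ("lunch on monday", "ham"),
    ]
    counts, docs = train(training)
    print(classify("free money", counts, docs))
    print(classify("monday meeting", counts, docs))
```

Real filters train on far larger corpora and use more careful tokenization, but the core idea is the same: words that appear mostly in spam push a message toward the spam label.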

21st-century AI: Wildly successful pilot programs

In the 2000s, greater computing power, larger datasets, and the rise of open-source software allowed developers to create advanced algorithms that would revolutionize the scientific, consumer, manufacturing, and business communities in a relatively short time. AI has become a reality for many businesses today. McKinsey, for instance, has found 400 examples where companies are currently using AI to address business problems.

The web offers new ways to organize data

The web revolution that swept the world in the early to mid-2000s left the world of AI research with some important changes. Foundational technologies like Extensible Markup Language (XML) and PageRank organized data in new ways that AI could use.

XML was a precondition for the semantic web and for search engines. PageRank, an early innovation from Google, further organized the web. These advancements made the web more useable and made large swaths of data more accessible to AI.
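For readers curious how a link-based ranking can work, the sketch below implements the simplified, published form of the PageRank idea using power iteration. It is not Google’s production system: the three-page link graph, the `pagerank` function, and the damping factor of 0.85 are assumptions for illustration, and the code expects every linked page to appear as a key in the graph.

```python
from typing import Dict, List


def pagerank(
    links: Dict[str, List[str]],
    damping: float = 0.85,
    iterations: int = 50,
) -> Dict[str, float]:
    """Estimate page importance from the link graph by repeated redistribution."""
    pages = list(links)
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline share of rank.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its rank evenly among the pages it links to.
                share = damping * ranks[page] / len(outgoing)
                for target in outgoing:
                    new_ranks[target] += share
            else:
                # A page with no links spreads its rank across all pages.
                share = damping * ranks[page] / n
                for target in pages:
                    new_ranks[target] += share
        ranks = new_ranks
    return ranks


if __name__ == "__main__":
    # Hypothetical three-page web: a and b both link to c, and c links to a.
    graph = {"a": ["c"], "b": ["c"], "c": ["a"]}
    for page, score in sorted(pagerank(graph).items()):
        print(page, round(score, 3))
```

In this toy graph, page c ends up with the highest score because two pages link to it, while page b, which nothing links to, ends up with the lowest.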

At the same time, databases were getting better at storing and retrieving data, while developers were working on functional programming languages that made it easier to operate on that data. The tools were now in place for researchers and developers to further AI technology.

Neural networks and deep learning demonstrate potential of AI

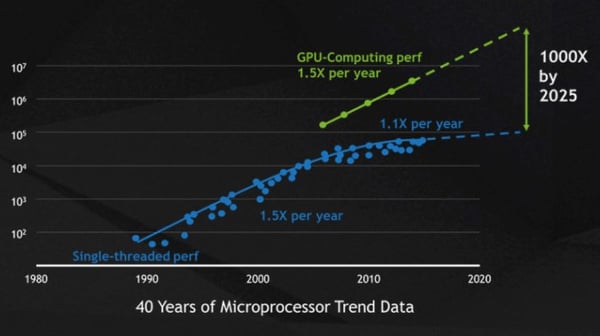

There were big dreams for AI in the 20th century, but limited computing power had made them nearly impossible to build. By the 21st century, however, computers were becoming exponentially more powerful at storing, processing, and analyzing large volumes of data. This meant the lofty goals of neural networks and deep learning could become a reality.

Researchers developed datasets that were specifically suited for the training of machines, resulting in neural networks like AlexNet. Beforehand, machine training relied on datasets that numbered in the tens of thousands, but the advance of graphics processing units (GPUs) meant new datasets could number in the tens of millions.

Computer chip manufacturer Nvidia launched its parallel computing platform, CUDA, in 2006. CUDA let developers use GPUs for general-purpose computing, which made large parallel workloads much faster. This performance boost later helped more people run large and complex machine learning models written with machine learning libraries like TensorFlow and PyTorch.

Down the road, these libraries would be released as open source and inspire widespread experimentation as the technology became more accessible. This democratization of AI would help exciting new systems like Google DeepMind’s AlphaGo get off the ground.

Computer vision opens the door to new industry applications

Up until the 2000s, AI was only really useful if you were processing text. But at the turn of the century, advancements in computer vision, which allowed computers to recognize and interpret images, pushed the use cases of AI to new heights.

This time, our pioneers weren’t academics. Instead, they lived inside your home: products like the Roomba and the Xbox Kinect made cleaning and gaming easier than ever before and put computer vision in homes across the world.

We also saw computer vision used in emerging self-driving cars and in hospitals to automatically detect conditions like lesions and pneumonia.

Beyond industry-specific use cases, advances in computer vision also helped ignite the advancement of robotic process automation (RPA). Supplemented with optical character recognition (OCR), RPA robots can process both structured and unstructured data, which has changed the world of data analysis as we know it.

Data analytics improves AI business applications

The past two decades have shown us that automation and AI can measure up against complex business use cases. And as AI gets even better at analyzing data, companies can leverage AI even more to help them work smarter and more efficiently.

Banks are using AI to classify customer queries into different categories from the large volumes of unstructured emails they receive annually. Done by hand, this process is labor intensive, and rule-based keyword classification generates poor results. AI allows banks to classify these emails with high levels of accuracy and reduce average handling time (AHT).

AI and automation aren’t just helping financial services companies, either. Healthcare payers are accelerating identification of high-risk pregnancies. A software robot loads verified patient data, accesses a predictive model to score the patient for risks, and determines the appropriate care management plan. The results: a 24% increase in the number of low birth-weight pregnancies that were identified accurately and 44% of low birth-weight pregnancies avoided, altogether saving $11 million annually.

Natural language processing and voice recognition boost usability of AI

Though AI started with the analysis of text, it’s by no means mastered it. Up until recently, text—even with OCR—needed to be structured in machine-readable formats. The field of natural language processing (NLP) has pushed the cutting edge on the ability to program computers to understand natural language.

One of the best-known examples of NLP is Generative Pre-trained Transformer 3 (GPT-3). GPT-3, introduced in May 2020, uses deep learning to generate text that closely resembles human-made text. Already, interesting applications for GPT-3 have emerged, such as writing articles (The Guardian, for instance, tasked GPT-3 with writing an article about the harmlessness of robots) and generating computer programs.

The applications of NLP extend beyond GPT-3. NLP can be used to transcribe speech into text, automatically summarize the meaning of a span of text, translate text across languages, and more.

Though NLP often exists on the cutting edge, it’s also made its way into our homes. Virtual assistants like Alexa and Google Assistant, for instance, can process natural language requests and translate them into executable commands. With a simple voice request, these AI assistants can search for information; route commands to smart devices, such as lights or locks; and more.

The future of AI: An enterprise game-changer

As we move into the next decade of technology maturity, the enterprise use cases for AI will only continue to grow. Past tools have laid the foundation for what’s possible with AI, but there’s still lots of ground to be broken by taking these tools to scale.

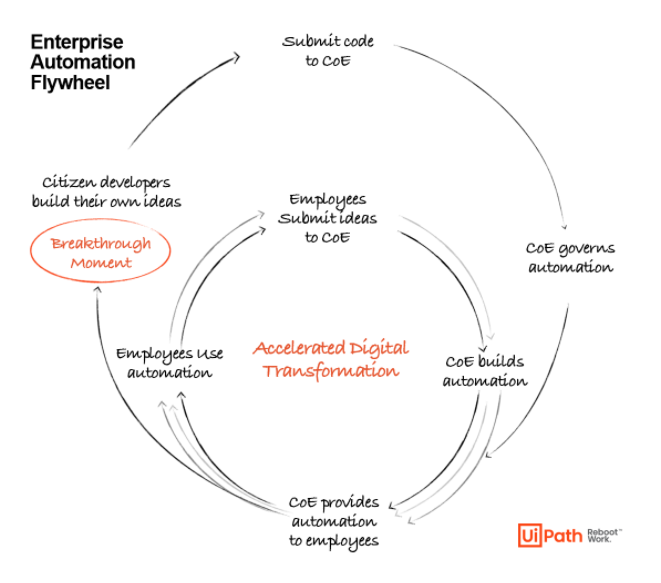

As AI advances even more, we’ll see companies leverage RPA, machine learning, process mining, and data analytics to create a powerful, end-to-end suite of automation as these technologies become more and more accessible to businesses at all maturity stages. AI will no longer be the exclusive domain of researchers and developers; everyday users, aided by modern tools, will be able to create AI-based solutions to the problems they identify.

As the technology gets more accessible to business users, we’ll see the flywheel of automation spin up to provide companies with more and more ideas and possibilities for AI applications. These possibilities will be supported by cutting-edge automation platforms and tools that will reboot and revolutionize how we work.

UiPath AI Fabric makes it easy for users to launch a model, pump data into it, get insights, and assess usefulness.

AI today: The right moment to get started

It’s time to automate. We’ve reached the stage in AI evolution where it isn’t theoretical—it is imperative and is predicted to unlock hundreds of billions of dollars in value for companies that embrace it.

Join us at the virtual Reboot Work Festival 2020 to celebrate what automation has made possible and learn more about how organizations and the people who support them can use AI to enable a new era of work.