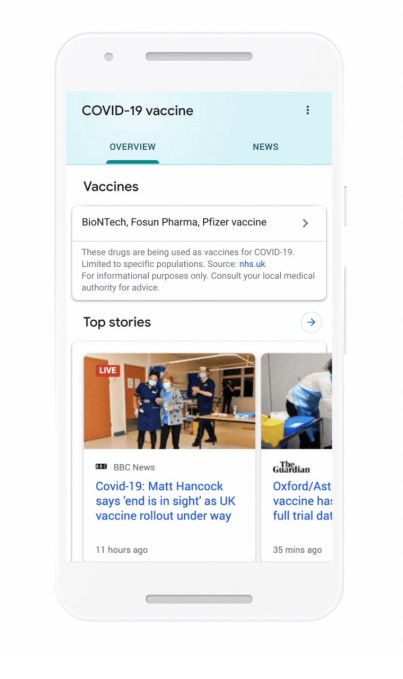

Google announced today that it’s introducing a new search feature that will surface a list of the vaccines authorized in a user’s location, as well as informational panels about each individual vaccine. The feature is launching first in the U.K., which earlier this month gave emergency authorization to the BioNTech/Pfizer coronavirus vaccine. The company says the feature will roll out to other countries as their local health authorities authorize vaccines.

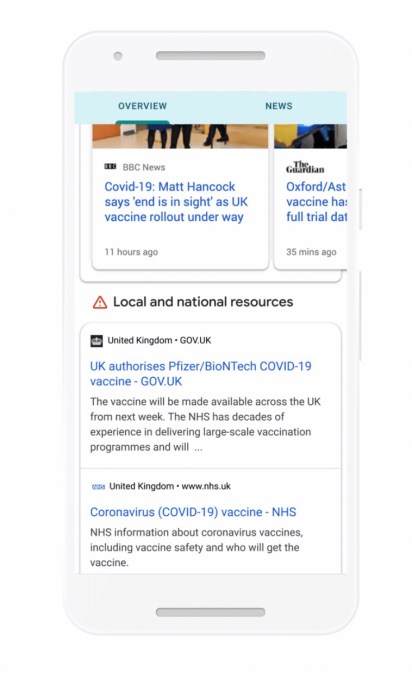

The feature itself will appear at the top of Google.com searches for Covid-19 vaccines and will present the authoritative information in a box above the search results, linking to the health authority as the source. The panel will also have two tabs. One will be the overview of the vaccine, which appears above Top Stories and links to Local and National resources, like government websites. The other will organize news related to the vaccine under a separate section.

Image Credits: Google

Google positioned the new search panels as one way it’s helping to address vaccine misinformation and hesitance at scale.

However, another arm of the company, YouTube, allowed Covid-19 misinformation and conspiracies to spread during the pandemic. While YouTube in April banned “medically unsubstantiated” content after earlier banning conspiracies that linked Covid-19 to 5G networks, it didn’t ban misinformation about Covid-19 vaccines until October. In other words, it didn’t proactively create a policy to ban all aspects of Covid-19 misinformation, but waited to address the spread of Covid-19 antivax content until vaccine approvals appeared imminent. This meant that clips making false claims, such as that vaccines would kill their recipients, cause infertility, or implant microchips, were not officially covered by YouTube’s policies until October.

And even after the ban, studies found that YouTube’s moderation missed many anti-vaccination videos.

This is not a new challenge for the video platform. YouTube has struggled to address antivax content for years, even allowing videos with prohibited antivax content to be monetized, at times.


Today, Google downplayed YouTube’s issues in its fight against misinformation, saying that its Covid-19 information panels on YouTube, which offer authoritative information, have been viewed over 400 billion times.

However, this figure also provides a look into the scale at which YouTube creators are publishing videos about the pandemic, often with just their opinions.

Google said that, to date, it has removed over 700,000 videos related to dangerous or misleading Covid-19 health information. If the platform were regulated, however, it would not be entirely up to Google to decide when a video with dangerous information should be removed, what constitutes misinformation, or what the penalty against the creator should be.

The company also noted that it’s now helping YouTube creators by connecting them with health experts to make engaging and accurate content for their viewers, and that it has donated $250 million in Ad Grants to help over 100 government agencies run PSAs about Covid-19 on the video platform. In April, Google also donated $6.5 million to support Covid-19-related fact-checking initiatives, and it is now donating $1.5 million more to fund the creation of a COVID-19 Vaccine Media Hub.
