Since widespread protests over racial inequality began, IBM has announced that it will cancel its facial recognition programs to advance racial equity in law enforcement. Amazon has suspended police use of its Rekognition software for one year to “put in place stronger regulations to govern the ethical use of facial recognition technology.”

But we need more than regulatory change; the entire field of artificial intelligence (AI) must mature out of the computer science lab and accept the embrace of the entire community.

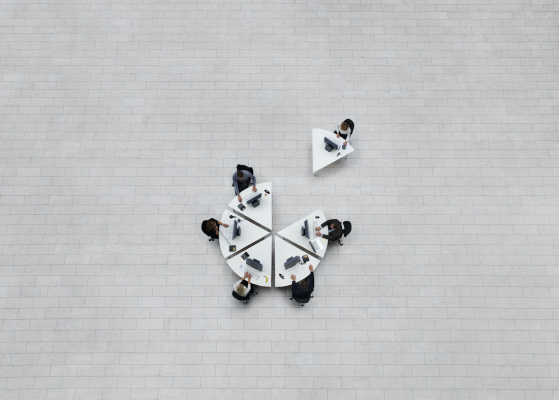

We can develop amazing AI that works in the world in largely unbiased ways. But to accomplish this, AI can’t be just a subfield of computer science (CS) and computer engineering (CE), like it is right now. We must create an academic discipline of AI that takes the complexity of human behavior into account. We need to move from computer science-owned AI to computer science-enabled AI. The problems with AI don’t occur in the lab; they occur when scientists move the tech into the real world of people. Training data in the CS lab often lacks the context and complexity of the world you and I inhabit. This flaw perpetuates biases.

AI-powered algorithms have been found to display bias against people of color and against women. In 2014, for example, Amazon found that an AI algorithm it developed to automate headhunting had taught itself to be biased against female candidates. MIT researchers reported in January 2019 that facial recognition software is less accurate in identifying people with darker skin. Most recently, in a study late last year by the National Institute of Standards and Technology (NIST), researchers found evidence of racial bias in nearly 200 facial recognition algorithms.

In spite of the countless examples of AI errors, the zeal continues. This is why the IBM and Amazon announcements generated so much positive news coverage. Global use of artificial intelligence grew by 270% from 2015 to 2019, with the market expected to generate revenue of $118.6 billion by 2025. According to Gallup, nearly 90% of Americans are already using AI products in their everyday lives, often without even realizing it.

Beyond a 12-month hiatus, we must acknowledge that while building AI is a technology challenge, using AI requires disciplines that are not centered on software development, such as social science, law and politics. But despite our increasingly ubiquitous use of AI, AI as a field of study is still lumped into the fields of CS and CE. At North Carolina State University, for example, algorithms and AI are taught in the CS program. MIT houses the study of AI under both CS and CE. AI must make it into humanities programs, race and gender studies curricula, and business schools. Let’s develop an AI track in political science departments. In my own program at Georgetown University, we teach AI and Machine Learning concepts to Security Studies students. This needs to become common practice.

Without a broader approach to the professionalization of AI, we will almost certainly perpetuate biases and discriminatory practices in existence today. We just may discriminate at a lower cost — not a noble goal for technology. We require the intentional establishment of a field of AI whose purpose is to understand the development of neural networks and the social contexts into which the technology will be deployed.

In computer engineering, a student studies programming and computer fundamentals. In computer science, they study computational and programmatic theory, including the basis of algorithmic learning. These are solid foundations for the study of AI, but they should be considered only components: necessary for understanding the field, but not sufficient on their own.

For the population to gain comfort with broad deployment of AI so that tech companies like Amazon and IBM, and countless others, can deploy these innovations, the entire discipline needs to move beyond the CS lab. Those who work in disciplines like psychology, sociology, anthropology and neuroscience are needed. So is an understanding of human behavior patterns and of the biases in data generation processes. I could not have created the software I developed to identify human trafficking, money laundering and other illicit behaviors without my background in behavioral science.

Responsibly managing machine learning processes is no longer just a desirable component of progress but a necessary one. We have to recognize the pitfalls of human bias and the errors of replicating these biases in the machines of tomorrow, and the social sciences and humanities provide the keys. We can only accomplish this if a new field of AI, encompassing all of these disciplines, is created.
